With a Content Delivery Network (CDN) in place, every user fetches the data they need from the nearest available network edge. Underlying this “magic” is usually a Global Server Load Balancing (GSLB) system, which is essentially the same ol’ DNS armed with modern GeoDNS capabilities. Typically, GeoDNS takes the client’s source IP, looks it up in a GeoIP database that maps IP addresses to geographical locations, calculates the (aerial) distance between the client and every candidate CDN edge, and picks the edge with the shortest distance. But how did we come to rely on the source IP?
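To make the GeoDNS steps concrete, here is a minimal Python sketch of the “nearest edge by aerial distance” decision. The edge names, coordinates, and the tiny IP-to-location table are hypothetical stand-ins (real GeoIP databases map address prefixes, not single IPs), and the great-circle distance is computed with the haversine formula.

```python
import math
from typing import Dict, Tuple

LatLon = Tuple[float, float]

# Hypothetical edge locations (latitude, longitude in degrees).
EDGES: Dict[str, LatLon] = {
    "us-west": (37.77, -122.42),  # San Francisco
    "us-east": (38.95, -77.45),   # Northern Virginia
    "eu-west": (53.35, -6.26),    # Dublin
}

# Hypothetical GeoIP table: source IP -> location (documentation addresses).
GEOIP: Dict[str, LatLon] = {
    "203.0.113.7": (34.05, -118.24),  # Los Angeles
    "198.51.100.9": (48.86, 2.35),    # Paris
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle ("aerial") distance between two points, in kilometers."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def geodns_pick(source_ip: str, default: str = "us-east") -> str:
    """Return the edge closest to the GeoIP location of source_ip."""
    location = GEOIP.get(source_ip)
    if location is None:
        return default  # IP not in the database: fall back to a default edge
    return min(EDGES, key=lambda edge: haversine_km(location, EDGES[edge]))


print(geodns_pick("203.0.113.7"))   # us-west
print(geodns_pick("198.51.100.9"))  # eu-west
```

Note that everything here hinges on the GeoIP lookup: if the table places an IP in the wrong state, the distance math faithfully picks the wrong edge.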

Given the current ubiquity of GPS and location-based services, it may seem hard to believe that finding a user’s location and directing her to the optimal network edge is still a technical challenge worth writing about. The Internet, however, was originally designed with the assumption that every resource is served from a single physical location. That may explain why DNS, the protocol used for discovering where a service is, was not designed as a “location-based service”. In those early days, the DNS server had no need to know the client’s location, so the protocol was not designed to convey it. That’s how we ended up using source IPs as an approximation of location.

Unfortunately, GeoIP databases are bound to be inaccurate, even with constant updates. The Internet is constantly shifting, and no one is required to publish geographically accurate information, so database maintainers are forced to mine public sources such as the Regional Internet Registries. In reality, nothing stops a US company from using a subset of its IP range at a European subsidiary, and cases like this can throw off GeoDNS. MaxMind, for example, a well-known vendor of GeoIP databases, claims “99.8% accuracy on a country level, 90% accuracy on a state level, 87% accuracy on a city level for the US within a 50 kilometer radius”. A mistake at the state level could be as bad as sending a west-coast user to an east-coast server.

Even when GeoDNS has an accurate client location, the geographical distance between two points turns out to be a poor approximation of their “network distance”. A better notion of “network distance” is the “round-trip time”, i.e., the time it takes for a packet to travel back and forth between the two points. Many factors play into the end-to-end round trip we measure: the mobile network technology (3G, LTE, HSPA, etc.), the peering relationships between different vendors, legacy hardware, transient conditions like congestion or outages, and so on. It often happens that the edge with the lowest latency is not the geographically closest one. If only the DNS server had this latency information…

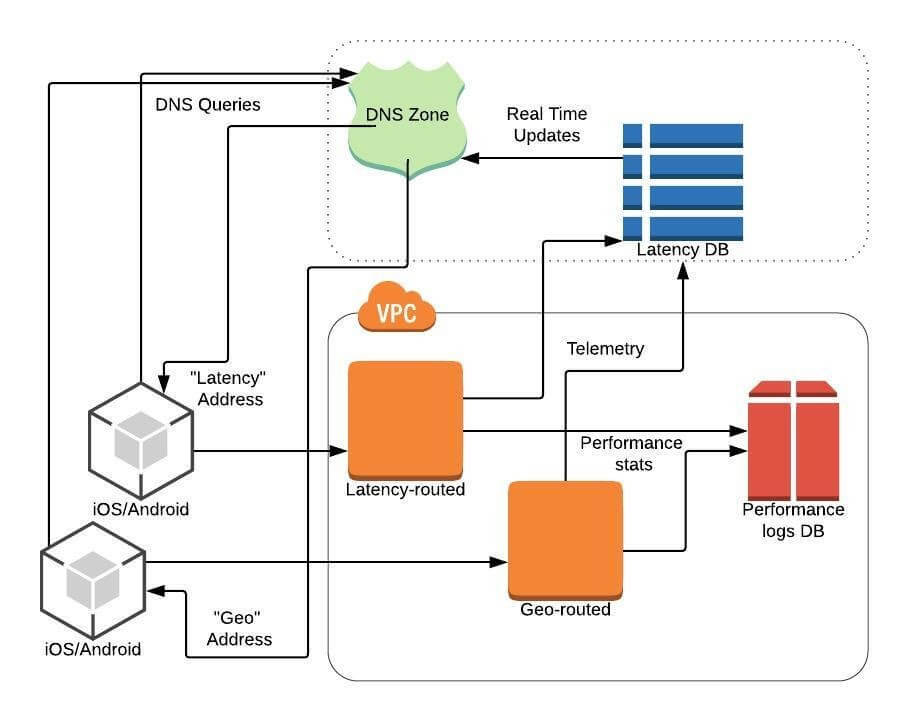

Luckily, NS1 has turned this wish into reality by feeding GeoDNS with real-time latency stats. The latency-measuring component is typically called Real User Monitoring (RUM). You can run your own RUM system, which feeds the DNS server with your own stats, or you can leverage the “Community data”, which is basically anonymized stats collected by other customers. If you choose to implement your own telemetry beacon, you have a lot of freedom in selecting your performance metric. It does not even have to be latency: it can be throughput, packet loss, cost, or any other numerical metric. Whatever metric you report goes into a dedicated database and has a real-time effect on DNS decisions. A heat map visualization of this data comes in handy when you need a quick look at global performance.
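The RUM side can be pictured as a store of recent beacon values. The sketch below is an assumption about how such a store might look, not NS1’s implementation: it keys samples by a hypothetical client-network identifier (for instance an ASN) plus the edge, keeps a bounded window so stale measurements age out, and scores each pair by the median of its recent samples, where lower is better.

```python
from collections import defaultdict, deque
from statistics import median
from typing import Deque, Dict, Optional, Tuple


class RumStore:
    """Recent metric samples per (client network, edge); a hypothetical design."""

    def __init__(self, window: int = 100) -> None:
        # A bounded deque per key makes old samples age out automatically.
        self._samples: Dict[Tuple[str, str], Deque[float]] = defaultdict(
            lambda: deque(maxlen=window)
        )

    def report(self, client_network: str, edge: str, value: float) -> None:
        """Record one beacon value, e.g. an RTT in milliseconds."""
        self._samples[(client_network, edge)].append(value)

    def score(self, client_network: str, edge: str) -> Optional[float]:
        """Median of recent samples, or None when no beacon covers the pair."""
        window = self._samples.get((client_network, edge))
        return median(window) if window else None


store = RumStore(window=3)
for rtt in (120, 80, 95, 90):
    store.report("AS64500", "us-west", rtt)
print(store.score("AS64500", "us-west"))  # 90 (median of the last three: 80, 95, 90)
print(store.score("AS64500", "us-east"))  # None
```

Because the score is just “a number where lower is better”, the same store would work for packet loss or cost; a higher-is-better metric such as throughput would need its sign flipped before sorting.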

When the DNS server gets a query, it looks up all potential answers to the query, for example all your network edges. It then passes those answers through a “chain” of filters, each of which performs a simple operation on the list of answers (e.g., removing or sorting entries). Given a list of your network edges, you can sort them by your chosen metric and return the first one to the client. If the metrics database does not cover all the edges, the filter opts out and you simply fall back to classic GeoDNS.
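A filter chain like this can be sketched as a list of functions applied in order. The filter names and answer fields below are hypothetical and do not reflect NS1’s actual filter API; the point is the fallback behavior, where the metric filter leaves the geographic ordering untouched whenever any candidate edge lacks a metric.

```python
from typing import Callable, Dict, List

# An answer is one candidate edge plus whatever fields the filters need.
Answer = Dict[str, object]
Filter = Callable[[List[Answer]], List[Answer]]


def up(answers: List[Answer]) -> List[Answer]:
    """Remove answers whose edge is marked as down."""
    return [a for a in answers if a.get("up", True)]


def geo_sort(answers: List[Answer]) -> List[Answer]:
    """Classic GeoDNS ordering: closest edge first."""
    return sorted(answers, key=lambda a: a["distance_km"])


def make_metric_sort(metrics: Dict[str, float]) -> Filter:
    """Sort by metric (lower is better), opting out if coverage is incomplete."""
    def metric_sort(answers: List[Answer]) -> List[Answer]:
        if any(a["edge"] not in metrics for a in answers):
            return answers  # keep the incoming (geographic) order
        return sorted(answers, key=lambda a: metrics[a["edge"]])
    return metric_sort


def select_first(answers: List[Answer]) -> List[Answer]:
    """Reply with a single answer."""
    return answers[:1]


def run_chain(answers: List[Answer], chain: List[Filter]) -> List[Answer]:
    for step in chain:
        answers = step(answers)
    return answers


edges = [
    {"edge": "us-east", "distance_km": 3600.0},
    {"edge": "us-west", "distance_km": 560.0},
    {"edge": "us-central", "distance_km": 1900.0, "up": False},
]
full = {"us-east": 45.0, "us-west": 70.0, "us-central": 30.0}
partial = {"us-east": 45.0}

print(run_chain(edges, [up, geo_sort, make_metric_sort(full), select_first])[0]["edge"])     # us-east
print(run_chain(edges, [up, geo_sort, make_metric_sort(partial), select_first])[0]["edge"])  # us-west
```

With full metric coverage, the chain returns the lowest-latency edge even though it is farther away; with partial coverage, the GeoDNS choice survives unchanged.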

This data-driven concept made immediate sense to us here on Salesforce’s Edge team. To begin with, we placed the huge variability seen on public networks at the center of our problem statement. We also proposed treating this variability as a “data problem”, meaning that future network performance can be predicted from past performance. Like any CDN, we observed the GeoDNS issues mentioned above and their performance implications. Since mobile devices report their time zone, it’s quite easy to detect DNS-misrouted sessions: one does not expect a user with a time zone like “US/Pacific” to land on the Virginia edge. We created a table that maps every time zone to its nearby edges; if a combination of edge and time zone is not in this table, the session is “misrouted”. We also tried redirecting these sessions to the correct edges, but the penalty of redirection, especially when the full TLS handshake has to be repeated, sometimes negates its benefits. A latency-driven DNS, on the other hand, improves session routing without adding any overhead to the process.
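The misrouted-session check reduces to a set lookup. In this sketch the table entries and edge names are hypothetical; as described above, any (time zone, edge) pair missing from the table counts as misrouted, including time zones the table does not list at all.

```python
from typing import Iterable, Set, Tuple

# Hypothetical table of (time zone, edge) pairs that count as "nearby".
NEARBY: Set[Tuple[str, str]] = {
    ("US/Pacific", "us-west"),
    ("US/Mountain", "us-west"),
    ("US/Mountain", "us-central"),
    ("US/Eastern", "us-east"),
    ("Europe/London", "eu-west"),
}


def is_misrouted(time_zone: str, edge: str) -> bool:
    """A session is misrouted if its (time zone, edge) pair is not in the table."""
    return (time_zone, edge) not in NEARBY


def misrouted_share(sessions: Iterable[Tuple[str, str]]) -> float:
    """Fraction of (time_zone, edge) sessions that are misrouted."""
    sessions = list(sessions)
    if not sessions:
        return 0.0
    return sum(is_misrouted(tz, edge) for tz, edge in sessions) / len(sessions)


sessions = [
    ("US/Pacific", "us-west"),
    ("US/Pacific", "us-east"),  # a west-coast user on the Virginia edge
    ("US/Eastern", "us-east"),
    ("Europe/London", "eu-west"),
]
print(misrouted_share(sessions))  # 0.25
```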

Being the data-driven team we are, we wanted to A/B test NS1’s Latency-driven DNS (a.k.a. “Pulsar”) before deploying it widely. From the DNS perspective, setting up the A/B test is easy: you create two records, one with the Latency filter and one without. Then you typically change your client code to resolve one of the names and tag the session as Latency-driven or Geo-driven accordingly. In our case, we wanted to avoid changing the mobile client and instead drive everything from the server side. Letting the DNS server make the A/B decision raises an interesting challenge: how can we tell on the application side which name was used, the Latency-driven or the Geo-driven one? The DNS server does not return this kind of information to the client. We ended up pointing the two names at different sets of server IP addresses, so each server IP is either a Latency-driven address or a Geo-driven one. The metric that we chose to report in our telemetry beacon is the aggregated round-trip time (RTT) across all the client’s requests.
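Because each server IP belongs to exactly one experiment arm, the server can tag a session from the local address the client connected to. The address pools below are hypothetical documentation ranges, and treating the “aggregated RTT” as the mean of per-request RTTs is an assumption for illustration; the text does not specify the aggregation.

```python
import ipaddress
from statistics import mean
from typing import Dict, List, Optional

# Hypothetical pools: the Latency-driven name resolves only to the first pool,
# the Geo-driven name only to the second (RFC 5737 documentation addresses).
ARMS: Dict[str, List[ipaddress.IPv4Network]] = {
    "latency": [ipaddress.ip_network("192.0.2.0/25")],
    "geo": [ipaddress.ip_network("192.0.2.128/25")],
}


def arm_for_server_ip(server_ip: str) -> Optional[str]:
    """Map the server address the client connected to onto an experiment arm."""
    addr = ipaddress.ip_address(server_ip)
    for arm, networks in ARMS.items():
        if any(addr in net for net in networks):
            return arm
    return None  # address outside both pools


def tag_session(server_ip: str, request_rtts_ms: List[float]) -> dict:
    """Telemetry record; using the mean as the 'aggregated RTT' is an assumption."""
    return {"arm": arm_for_server_ip(server_ip), "rtt_ms": mean(request_rtts_ms)}


print(arm_for_server_ip("192.0.2.10"))                 # latency
print(arm_for_server_ip("192.0.2.200"))                # geo
print(tag_session("192.0.2.200", [40, 61])["rtt_ms"])  # 50.5
```

The design keeps the mobile client untouched: the only experiment state lives in DNS (which name maps to which pool) and in the server’s knowledge of its own addresses.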

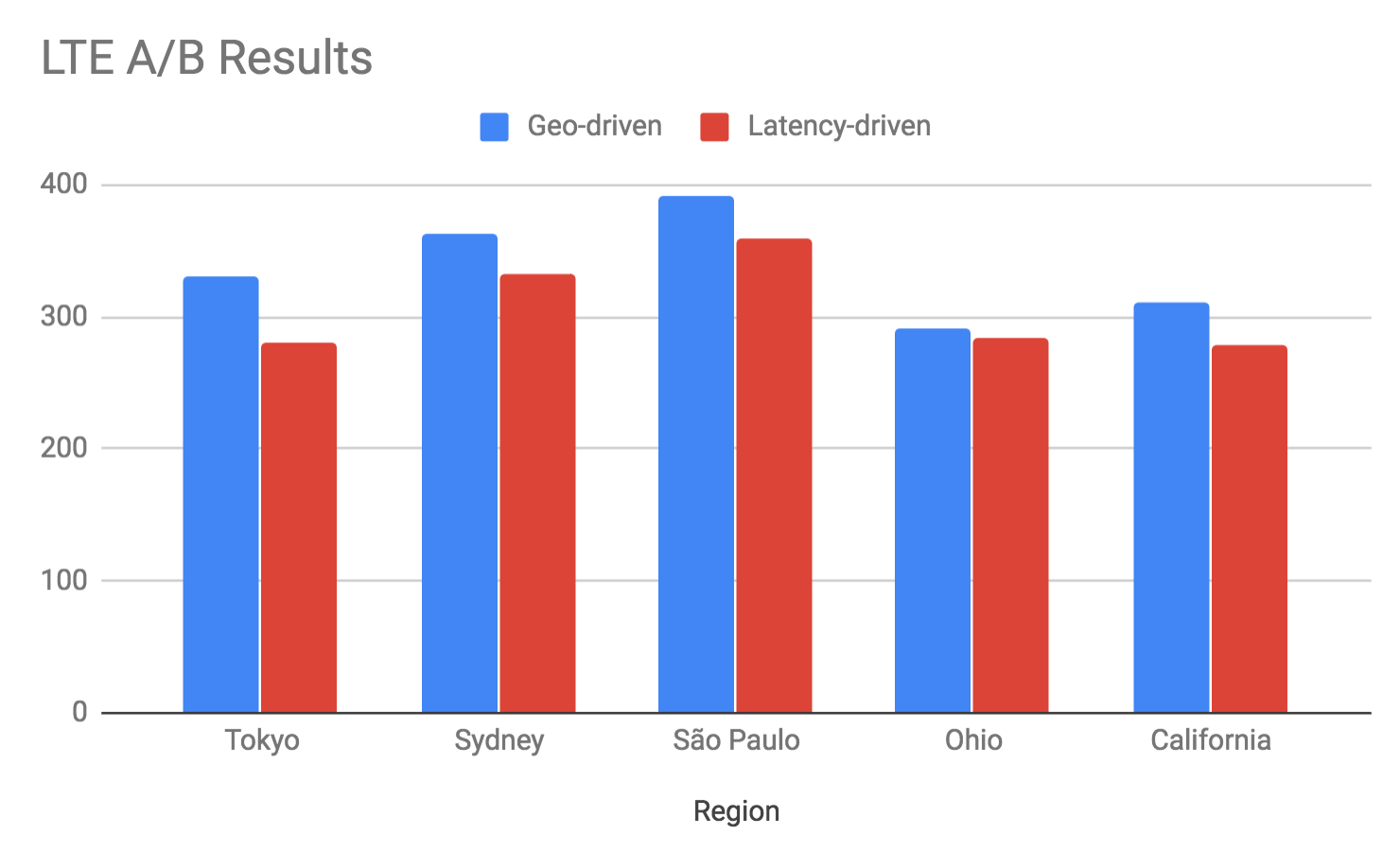

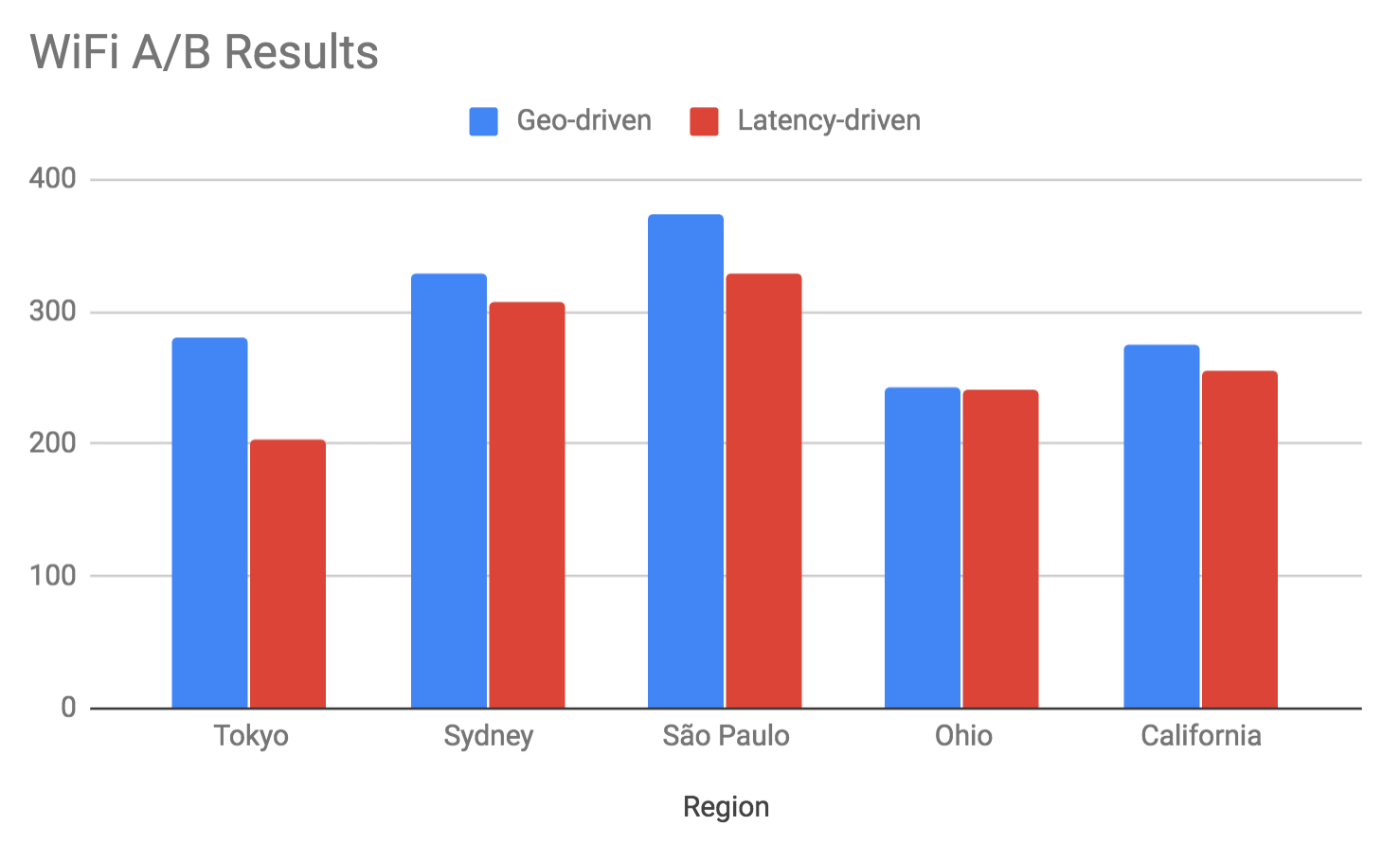

The A/B experiment ran for three weeks, with all stats recorded in our database. We sliced the results by network edge (AWS region) and network technology (LTE, Wi-Fi, etc.).

Over LTE, we observed that time-to-first-byte improved by 9% to 14% across all geographies.

Over Wi-Fi, we observed that time-to-first-byte improved by 8% to 27% across all geographies.
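Slicing the results amounts to grouping sessions by (edge, network technology) and comparing the two arms within each slice. The sketch below assumes a per-slice median and a relative improvement of 100 * (geo - latency) / geo; the exact statistic behind the figures above is not stated, and the input values are toy numbers, not our measurements.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple

Session = Tuple[str, str, str, float]  # (edge, network, arm, ttfb_ms)


def improvement_by_slice(sessions: Iterable[Session]) -> Dict[Tuple[str, str], float]:
    """Percent TTFB improvement of the latency arm over the geo arm, per slice.

    Uses the median of each arm; slices missing either arm are skipped.
    """
    groups: Dict[Tuple[str, str], Dict[str, List[float]]] = defaultdict(
        lambda: {"geo": [], "latency": []}
    )
    for edge, network, arm, ttfb_ms in sessions:
        groups[(edge, network)][arm].append(ttfb_ms)

    result = {}
    for slice_key, arms in groups.items():
        if arms["geo"] and arms["latency"]:
            geo = median(arms["geo"])
            latency = median(arms["latency"])
            result[slice_key] = 100.0 * (geo - latency) / geo
    return result


# Toy numbers for illustration only, not our measurements.
toy = [
    ("us-east-1", "LTE", "geo", 200.0),
    ("us-east-1", "LTE", "latency", 180.0),
    ("eu-west-1", "Wi-Fi", "geo", 150.0),  # no latency-arm sessions: skipped
]
print(improvement_by_slice(toy))  # {('us-east-1', 'LTE'): 10.0}
```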

We also looked at coast-to-coast misrouted sessions. With Geo-driven DNS, 17% of the users served by the Virginia edge were actually coming from California. With Latency-driven DNS, the share of Californians among Virginia users dropped to 9%.

We continue working closely with NS1 to realize the full potential of Pulsar. Remember: it’s not about the miles, it’s about the milliseconds!

This post originally appeared on the Salesforce Engineering Blog.